25 days into 365 days of AI

So far, I’ve created something with AI for 25 consecutive days. In my first 10 days, I mainly asked ChatGPT to help me build specific apps based on my own ideas. I wanted to understand the extent to which ChatGPT could help me build software. I would say things like, “help me make a web app that does x” or “write a flask app that does y”. I supplied the ideas and asked the questions, and ChatGPT did the building.

More recently, I started experimenting with letting ChatGPT take more of the lead in generating ideas itself. Instead of providing my own ideas, I focused on communicating the kind of outcome I wanted to achieve. For example, I would ask ChatGPT to “help me generate ideas for software tools that could help me work faster”. If one of ChatGPT’s ideas seemed interesting, I would ask ChatGPT to build it.

As the days went on, my experiments expanded into making things with other AI-based tools: transforming voices using AI audio models, and running some initial experiments with Midjourney, RunwayML, and D-ID.

You can review everything I create on a daily basis on Futureland in a journal titled “365 days of AI”. In this post, I will focus on highlights and reflections.

The Productivity Wheel

Around Day 14, I leaned into asking ChatGPT to generate ideas for tools and games that could help me work faster. I asked it to integrate the pomodoro technique into its ideas. The pomodoro technique is a focus method that involves setting a timer and working on a single task for 25 minutes.

The first idea ChatGPT came up with was “The Productivity Wheel”, a paper-based game where you write all of your important tasks on a wheel and then spin it. Whatever task the wheel lands on is what you work on for the next 25 minutes.
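
Stripped down, the game's core mechanic is a uniform random pick plus a fixed 25-minute session. The snippet below is a minimal sketch of that idea in Python, not the code ChatGPT actually wrote for me; `spin_wheel` and `POMODORO` are hypothetical names.

```python
import random
from datetime import timedelta

# Length of one pomodoro focus session (25 minutes, per the technique above).
POMODORO = timedelta(minutes=25)

def spin_wheel(tasks, rng=random):
    """Hypothetical helper: 'spin' the wheel by picking one task uniformly at random."""
    if not tasks:
        raise ValueError("the wheel has no tasks on it")
    return rng.choice(tasks)

if __name__ == "__main__":
    wheel = ["Write blog post", "Reply to email", "Fix login bug"]
    task = spin_wheel(wheel)
    minutes = int(POMODORO.total_seconds() // 60)
    print(f"Work on {task!r} for {minutes} minutes")
```

Passing in a seeded `random.Random` as `rng` makes the spin repeatable, which is handy for testing.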

I thought this was an interesting concept, but I did not want to use or make a paper-based version of this game. So I asked ChatGPT if it could turn the idea of “The Productivity Wheel” into a web app. It replied, “Certainly!” It took a little back and forth, there are still some quirks, and the design needs improvement, but ChatGPT was able to complete a working version of “The Productivity Wheel” very quickly. Within a few minutes, we had something.

The Productivity Wheel is not a tool I use right now, but I could see myself using something like it in certain situations. I think I could take some of its mechanics and apply them elsewhere.

For example, in a conversation with someone, I could put several subjects on a wheel and then spin it. We would discuss whatever subject the wheel lands on for 25 minutes, then take that subject off the wheel. I could apply the same mechanics to my workouts, spinning a wheel and doing whatever exercise it lands on. Or I could use it to decide where to go out for dinner on a Friday night: whatever restaurant the wheel lands on is where we go.

It might also be a fun mechanic to integrate into software like a to-do list app or a habit tracker app. In a to-do list app, perhaps the user could press a button like “Spin” or “Feeling Lucky”, and the interface would highlight tasks one after another from top to bottom and then bottom to top, starting fast and then slowing down until it stops on the task the user must complete. This could be helpful in situations where I have a bunch of tasks to do but can’t decide what to work on first.
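
The “Spin” interaction for a to-do list could be sketched roughly like this. It is a hypothetical sketch, not something ChatGPT or I built: the function names, the 20-frame minimum, and the 1.12 slowdown factor are all assumptions. It picks the task first so every task is equally likely, then builds a back-and-forth highlight sequence that starts fast, slows down, and ends on that task.

```python
import random

def bounce_index(step, n):
    """Index highlighted at a given step: sweeps 0..n-1, then back up, repeating."""
    if n == 1:
        return 0
    period = 2 * (n - 1)  # one full top-to-bottom-and-back sweep
    pos = step % period
    return pos if pos < n else period - pos

def spin(tasks, rng=random, min_steps=20, start_delay=0.05, slowdown=1.12):
    """Hypothetical 'Feeling Lucky' spin: pick a task, then build an animation that lands on it.

    Returns (chosen_task, frames), where frames is a list of
    (task_index, delay_seconds) pairs: a UI would highlight tasks[task_index]
    and wait delay_seconds before showing the next frame.
    """
    if not tasks:
        raise ValueError("no tasks to spin")
    n = len(tasks)
    target = rng.randrange(n)  # choose fairly first, then animate toward it
    steps = min_steps
    # Extend the animation until its final frame lands on the target;
    # every index appears within one sweep, so this loop always ends.
    while bounce_index(steps - 1, n) != target:
        steps += 1
    frames = []
    delay = start_delay
    for step in range(steps):
        frames.append((bounce_index(step, n), delay))
        delay *= slowdown  # each frame lingers a bit longer, so the spin slows down
    return tasks[target], frames
```

Choosing the winner before animating is the key design choice here. If the stopping point came from a random number of steps instead, the first and last tasks would be picked less often, because the bounce passes them only once per sweep while it passes the middle tasks twice.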

All of that being said, I could also just feed a set of options into ChatGPT and have it choose one for me at random. And that’s something I keep struggling with as this experiment goes on. I don’t know what tools I need anymore. ChatGPT, and technology like it, can do so many things. It feels like I’m very far away from understanding its limits in terms of the role it can play in my daily life and creative process.

I discussed all of this with ChatGPT and we felt like it might be worth branching out and experimenting with other tools to understand what else is happening in the space of AI.

Experimenting with AI Voice Models

On Day 16, I started experimenting with AI voice models. I came across a tool called so-vits-svc, which is designed to convert singing voices into other voices while preserving the original pitch and intonation. Using AI voice models, it can make the transformed voice sound like a specific person or character, such as Kanye West or a fictional character.

For example, you can use the tool to transform a recording of your voice into Kanye’s voice, or use the tool to transform a song by Drake to sound as if it was performed by someone else.

At first, I just tried to get it to work with the only model I had access to, which was Kanye’s. I took an acapella of Drake’s track “Jungle” and used the tool to make the recording sound as if it was performed by Kanye West.

Once I understood how to convert a voice, I tried to make full cover tracks. I created AI covers of Travis Scott & Pharrell’s “Down In Atlanta” and Gang Starr’s “Mass Appeal”, both now performed by an AI version of Kanye West.

It was fun to play with this tool, but I don’t know exactly what to make of it yet. Using a tool like this feels groundbreaking because it gives you an entirely new and powerful ability while also being very simple to use. What I mean is, these voice-based AI models are likely leading up to a complete transformation of how vocals are recorded and performed in music, and even of how music is produced, yet they are so easy to access and use.

It reminds me of when I first started using Napster. It felt crazy to be able to download any track I wanted so easily. My friends could do it too, and because of that, we discovered so much new music together. What was also interesting were the remixes that started to appear. People would take an acapella from one rap track, which was now easy to find with Napster, and put it over a new instrumental, which was also easier to find because of Napster. Seeing mashups like this encouraged me and other listeners to edit the music we were listening to into something new.

Then, when I first got Kazaa, which was like Napster but also let you download videos, I started to discover new mashups of video and audio that would usually never mix. For example, combining electronic music like Darude’s “Sandstorm” with a video of Goku from Dragon Ball Z transforming into a Super Saiyan. This kind of remixed media is extremely common now, but at the time, it was groundbreaking and offered a glimpse into the future of multimedia content.

These AI voice models and other AI based tools feel very similar to my early experiences with Napster and Kazaa. It feels like we are watching new kinds of media combine for the first time in unique and interesting ways, foreshadowing the types of combinations and remixes which will become very common very soon.

Exploring AI in Film, Visual Effects, and Avatars

On Days 19 and 20, I ran some simple experiments with Midjourney, an AI-powered tool that generates high-quality images from text prompts and descriptions. Midjourney is very easy to use, and the quality of the images you can generate with it is unreal.

In the same way I struggle to understand the full implications of the voice conversion models I used, I don’t know what to make of Midjourney either. This is a recurring theme in this experiment: sensing a huge shift, but being unable to comprehend all of its implications.

I have spent time working in film production, and one of the first steps of developing a concept, say for a music video, is to develop a creative treatment. In the treatment, you provide visual references to communicate how you will express things visually. These references can come from other films, GIFs on the Internet, other music videos, magazines, or really anywhere, but they are never newly generated visual references.

Generating new references would require hiring a concept artist who can work with the director and producers to understand the vision of the project, and then put in the time and effort to produce an array of visual concepts that can be discussed, narrowed down, and further refined. This can be an expensive process, but now, with tools like Midjourney, every visual reference in a treatment can be a newly generated visual concept.

Sometimes in an attempt to express a new visual style, a filmmaker may use existing visual references, and then use words to try and explain how the existing visual reference may be modified into something new. Or the filmmaker will take the existing visual reference and try to modify it in some way to communicate a new style they are trying to achieve. These are never perfect descriptions of the new style and they require the reader of the treatment to use their imagination.

Now, new visual styles or approaches can be easily and clearly expressed through tools like Midjourney, which can rapidly generate endless new styles with the right prompts. This allows filmmakers to pursue new remixed genres, stylistic mashups, and entirely new creative approaches with less risk, because they can see the potential outcome in high fidelity before investing more time and money into the new style.

That being said, the generated images used in a creative treatment would need to take into account the team and budget of the project. Midjourney makes it easy to produce world-class visual concepts for any project, but executing on those ideas may be beyond the abilities of the team and the budget.

Of course, all of this assumes the role of the filmmaker stays the same as it is today, which is to orchestrate individuals through a multifaceted process in the pursuit of bringing an idea to life through moving images. However, the role of the filmmaker, or the makeup of a team working on a film, may be transformed further if tools like Midjourney can take prompts and turn them into captivating moving images with stories that resonate with viewers.

On Days 21, 22, and 23, I started experimenting with a tool called Runway. It is difficult to sum this tool up in a single description. On their website, they describe Runway as “a new kind of creative suite”. From using it so far, I would say it is something like Final Cut Pro rebuilt from the ground up to run in your browser, with AI-based functionality built into its core design. That’s what it feels like to me so far, anyway.

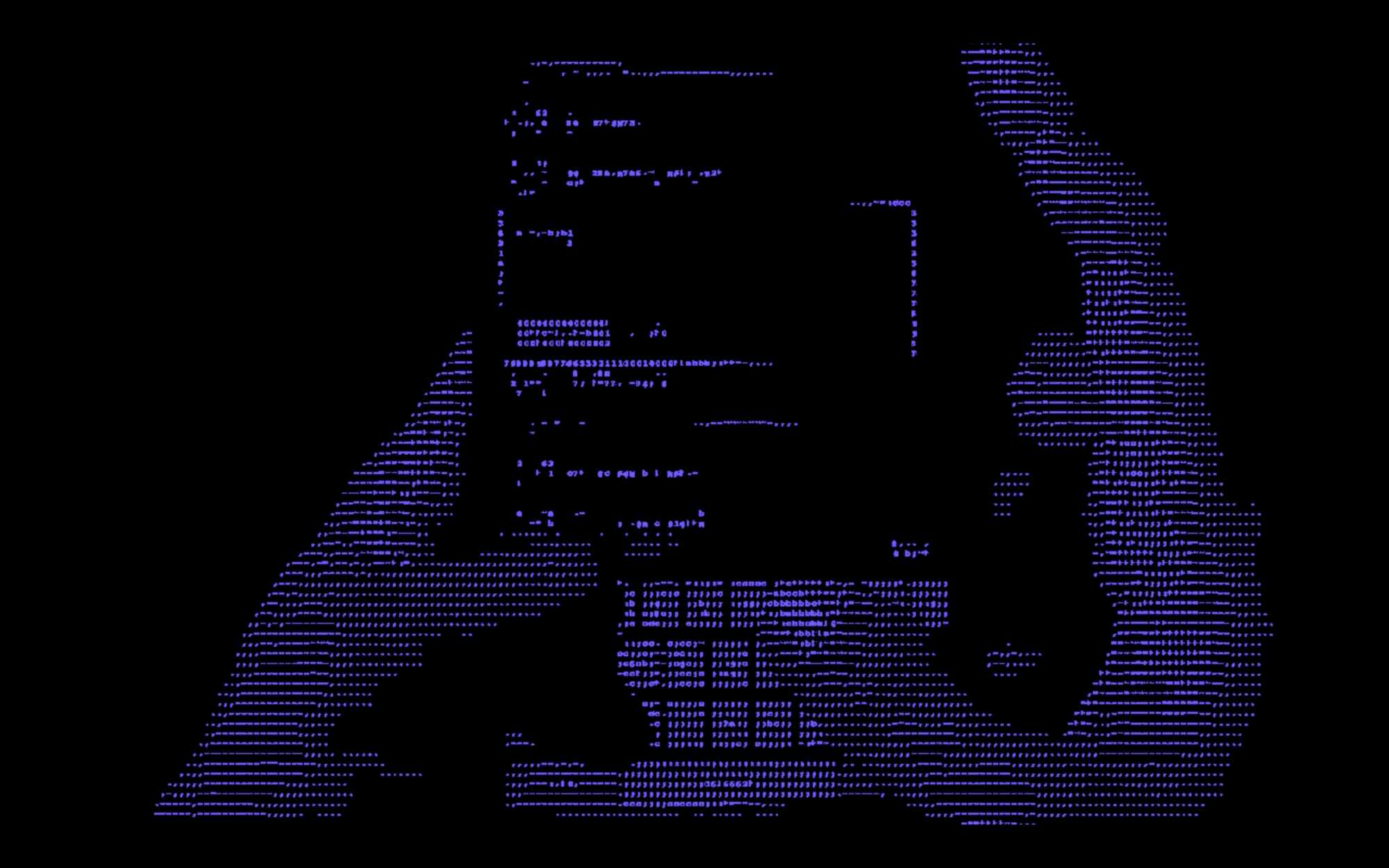

I started by applying various effects to existing overhead videos of me using Futureland that I shot a few months ago with a Sony FX3. These effects included claymation, sketch, watercolour, and ASCII. Most of these styles are interesting, but I was not able to figure out how to control and modify the effects in a way that achieved a usable outcome. A usable outcome in this case would be one that retained the core focus of the original video, which was the software interfaces displayed on the iPhone’s screen.

I did, however, really enjoy the aesthetic of the ASCII effect. I thought it was unique and pleasing. It’s going to be interesting to watch these effects evolve as they get better and better.

I think it’s very powerful to be able to record a video and then apply a creative effect that entirely transforms its appearance, adding rich new details, while retaining the smoothness and unique characteristics of the original recording. This reminds me of the AI voice model I used earlier in this post. In video and audio production, it seems like the most compelling outcomes are ones where a human performance is blended with something generated by AI.

On Days 24 and 25, I started experimenting with AI avatars created using D-ID. The software is extremely easy to use. You simply upload a picture with a character in it or choose an existing avatar. Then you can write text or upload your own voice, and the avatar will perform your text or your recording.

I ran these tests very quickly.

In the first one, I took an image that I generated with Midjourney and used it as the avatar. Then I uploaded a recording of me saying, “I see everything interconnected, all at once”, and generated a video with that voice recording.

What I found kind of surprising about the video D-ID generated was that not only did it make the character blink and move its head convincingly, but it also gave the character teeth when it opened its mouth, even though they were not part of the original image. I just didn’t expect to see that.

In my second test, I took an image I generated with Midjourney, inspired by the recent Balenciaga videos by demonflyingfox, and gave it an audio clip from the Kanye West AI cover of “Down In Atlanta” that I created earlier. I should have isolated just the acapella before giving it to the avatar so its performance would be smoother, but I was in a rush and fed the avatar the entire track. I thought the result was kind of funny because the avatar voices both the beat and the lyrics. I did the same thing with the Kanye West AI cover of “Mass Appeal” that I created earlier.

There are better examples of these avatars, but I think these are interesting results considering how easy it is to create one of these talking avatars. It’s weird to think that all of this is going to keep getting so much better. I imagine it’s going to be more and more common for us to be having rich interactions with non-human avatars.

Continuing to explore

My research is still at a stage where I am just tinkering with these tools to understand what’s possible. I imagine this will continue to be the case for a while.

Outside of my daily and continual use of ChatGPT, I am not yet using other AI tools to execute complex creative projects or enhance my creative process. Some of these tools still feel disjointed from my daily workflow, and I don’t know exactly what to use them for yet, but as this technology continues to develop, I expect that will change.

It’s already pretty clear that these technologies are going to shape the future in many ways, especially in how we relate to and interact with computers, and in how much more they contribute to the creative process and our daily lives.